The XR Interaction Toolkit package is a high-level, component-based interaction system. It provides a framework that makes 3D and UI interactions available from Unity input events. The core of this system is a set of base Interactor and Interactable components, and an Interaction Manager that ties these two types of components together. It also contains helper components that you can use to extend functionality, such as drawing visuals and hooking in your own interaction events.
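
To make the Interactor / Interactable / Interaction Manager relationship concrete, here is a minimal conceptual sketch in Python. It is not the toolkit's C# API: every class, field, and method name below (`Interactor`, `Interactable`, `InteractionManager`, `reach`, `select_pressed`, `process`) is hypothetical, and "hover" is simplified to a distance check. It only illustrates the idea that interactors and interactables never talk to each other directly; a single manager checks every pair once per update and raises hover and select events.

```python
import math
from dataclasses import dataclass, field


@dataclass(eq=False)
class Interactable:
    """Hypothetical stand-in for an object the user can hover over and select."""
    name: str
    position: tuple
    hovered_by: set = field(default_factory=set)  # names of hovering interactors
    selected_by: "Interactor | None" = None


@dataclass(eq=False)
class Interactor:
    """Hypothetical stand-in for a controller that can hover targets within reach."""
    name: str
    position: tuple
    reach: float
    select_pressed: bool = False  # assumed to mirror a controller's select input

    def can_hover(self, target: Interactable) -> bool:
        return math.dist(self.position, target.position) <= self.reach


class InteractionManager:
    """Mediates between every registered interactor and interactable."""

    def __init__(self):
        self.interactors = []
        self.interactables = []
        self.events = []  # log of (event, interactor name, interactable name)

    def register_interactor(self, interactor):
        self.interactors.append(interactor)

    def register_interactable(self, interactable):
        self.interactables.append(interactable)

    def process(self):
        """Run one update: refresh hover state, then grant or release selection."""
        for interactor in self.interactors:
            for target in self.interactables:
                hovering = interactor.can_hover(target)
                if hovering and interactor.name not in target.hovered_by:
                    target.hovered_by.add(interactor.name)
                    self.events.append(("hover_enter", interactor.name, target.name))
                elif not hovering and interactor.name in target.hovered_by:
                    target.hovered_by.discard(interactor.name)
                    self.events.append(("hover_exit", interactor.name, target.name))

                # Selection starts only while hovering with select pressed, and
                # only one interactor may hold a target at a time. It ends when
                # the holding interactor releases select.
                if hovering and interactor.select_pressed and target.selected_by is None:
                    target.selected_by = interactor
                    self.events.append(("select_enter", interactor.name, target.name))
                elif target.selected_by is interactor and not interactor.select_pressed:
                    target.selected_by = None
                    self.events.append(("select_exit", interactor.name, target.name))


# Usage: one hand within reach of one cube, pressing and then releasing select.
manager = InteractionManager()
hand = Interactor("right_hand", (0.0, 0.0, 0.0), reach=0.5)
cube = Interactable("cube", (0.3, 0.0, 0.0))
manager.register_interactor(hand)
manager.register_interactable(cube)

manager.process()          # cube is within reach: hover_enter
hand.select_pressed = True
manager.process()          # select_enter
hand.select_pressed = False
manager.process()          # select_exit
```

Routing everything through one manager keeps interactors and interactables decoupled, so new interaction types can be added without each component needing to know about the others.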

The XR Interaction Toolkit contains a set of components that support the following interaction tasks:

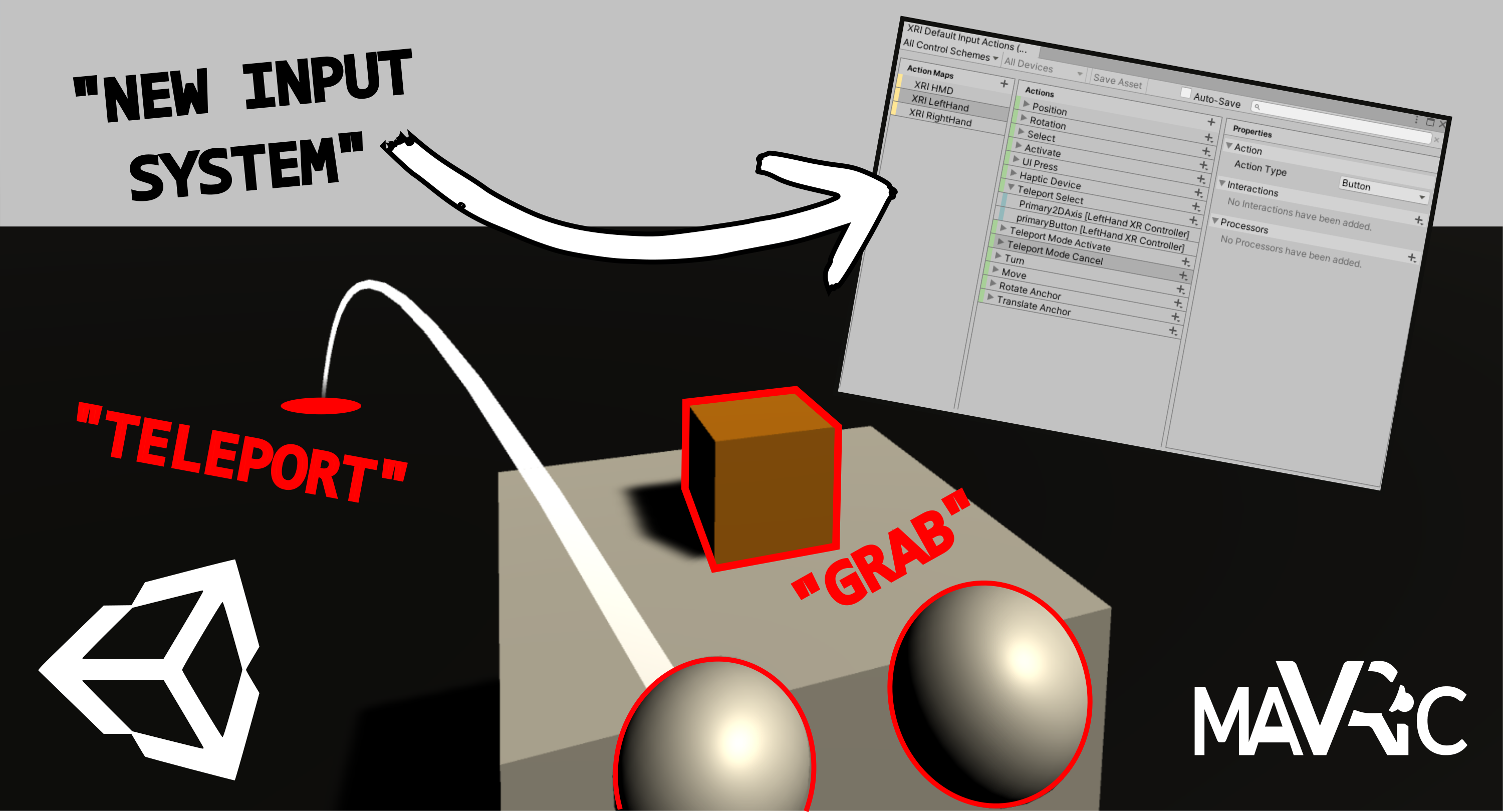

- Cross-platform XR controller input

- Basic object hover, select, and grab

- Haptic feedback through XR controllers

- Visual feedback (tint/line rendering) to indicate possible and active interactions

- Basic canvas UI interaction with XR controllers

- A VR camera rig for handling stationary and room-scale VR experiences

The toolkit also provides AR interaction components. To use them, your project must include the AR Foundation package. The AR functionality provided by the XR Interaction Toolkit includes:

- AR gesture system to map screen touches to gesture events

- AR placement interactable to place virtual objects in the real world

- AR gesture interactor and interactables to translate gestures such as place, select, translate, rotate, and scale into object manipulation

- AR annotations to inform users about AR objects placed in the real world
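
As a rough illustration of what "mapping screen touches to gesture events" involves, the following Python sketch classifies a single finger's touch samples as a tap, drag, or hold, and computes a two-finger pinch scale factor. It is a hypothetical model, not the toolkit's gesture system: the `TouchSample` type, the function names, and the threshold values `TAP_MAX_SECONDS` and `DRAG_MIN_PIXELS` are all assumptions chosen for the example.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds; a real gesture system would tune these per device.
TAP_MAX_SECONDS = 0.3
DRAG_MIN_PIXELS = 20.0


@dataclass
class TouchSample:
    """One screen-space sample of a finger: timestamp in seconds, position in pixels."""
    time: float
    x: float
    y: float


def classify_single_touch(samples):
    """Map one finger's samples (from touch began to touch ended) to a gesture name."""
    if not samples:
        raise ValueError("need at least one touch sample")
    start, end = samples[0], samples[-1]
    # Largest distance the finger strayed from where it first touched down.
    moved = max(math.dist((start.x, start.y), (s.x, s.y)) for s in samples)
    duration = end.time - start.time
    if moved >= DRAG_MIN_PIXELS:
        return "drag"
    if duration <= TAP_MAX_SECONDS:
        return "tap"
    return "hold"


def pinch_scale(a_start, b_start, a_now, b_now):
    """Scale factor for a two-finger pinch: current finger spread / initial spread."""
    return math.dist(a_now, b_now) / math.dist(a_start, b_start)


# Usage
tap = [TouchSample(0.0, 100, 100), TouchSample(0.1, 102, 101)]
drag = [TouchSample(0.0, 100, 100), TouchSample(0.2, 160, 100)]
hold = [TouchSample(0.0, 100, 100), TouchSample(1.0, 101, 100)]
gestures = [classify_single_touch(t) for t in (tap, drag, hold)]
scale = pinch_scale((0, 0), (100, 0), (0, 0), (200, 0))
```

In a scheme like this, an interactable could respond to a tap by placing or selecting an object, to a drag by translating it, and to a pinch by scaling it by the returned factor.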